Hi,

I will provide weekly updates on my GSoC project in this thread. Previous discussions on this project can be found here.

Project : Confusion Pair Correction Using Sequence to Sequence Models

Mentor : @jaumeortola

Hi,

I will provide weekly updates on my GSoC project in this thread. Previous discussions on this project can be found here.

Project : Confusion Pair Correction Using Sequence to Sequence Models

Mentor : @jaumeortola

[Update May 15th]

-> Created training data that covers instances of all confusion pairs.

-> Dataset contains 12,750,694 sentences out of which 921,997 are correct sentences and the rest are incorrect variations of the correct ones.

-> After doing some research, I figured i’ll use FastText embeddings rather than GloVe embeddings (Although I would like to do a comparison of FastText vs GloVe performance if time permits.)

Target for the next update

-> preprocessing for seq-to-seq model

-> Coding up the seq-to-seq model with bi-directionals LSTM for the encoder.

[Update May 18th]

-> Implemented preprocessing.

-> Implemented bi-directional encoder.

-> Going to start training the model.

Problems

-> What is the max input length of the sentence that LT takes? Right now im configuring the model to support max length = 20

-> While the model is training, I want to start working on the integration of the model to LT. I will use the DeepLearning4j library in Java for this. What would be the entry point of my system in the LT codebase? Need some help with that.

There is no such a limit in LT. Sentences can be of any length. You can loop over every token in a sentence, and look at the 10 tokens before and 10 tokens after. That sounds reasonable. You can adjust these parameters later after testing.

You will have to look carefully if there is any discrepancy between LanguageTool word tokenization and what you expect it to be. For example, currently in LT “you’re” is represented with three tokens. LT analysis can be seen here. If you need another tokenization, you can just join the whole sentence and split it again.

The entry point will be what we call a “Java rule”. Here you have a simple demo rule.

In a Java rule you can loop over the tokens of a sentence. Each token has a word form, and one or more lemmas and POS tags. If the token has been properly disambiguated, there is only one lemma and one POS tag, but that is not always possible or desirable. I guess you plan to use only word forms. But the other parameters could be useful. I will write more about this.

As a result, the Java rule returns an array of matches (possible errors). See here.

You will probably need an abstract Java rule plus another rule for each language (English, German, etc.) with the language data. See some abstract rules here and some English implementations here.

[Update June 2nd]

→ Finished training the model (English Version 1.0) on the tatoeba dataset

→ Git repo : GitHub - drexjojo/confusion_pair_correction

→ The data and the pickle files are not uploaded on github as they are too large for github

→ Started training the model on the British National Corpus

[Update June 13th]

→ Model trained on the BNC corpus. The git repo have been updated.

→ Regarding DL4J integration, I have raised an issue on their git and they are looking into it.

EVALUATION DETAILS

On 300 sentences of seen (trained) data :

Precision : 0.96327716754214391

Recall : 0.9012584882220365

On 300 sentences of unseen data from the europarl corpus:

Precision : 0.8451816745655608

Recall : 0.8143074581430746

Avg Time taken per sentences : 0.12467655142826432 seconds

I expect the results to improve once I have trained it on the europarl corpus.

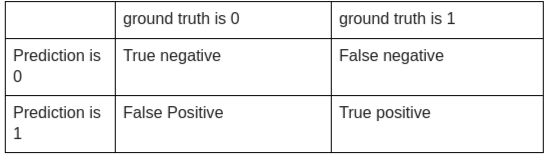

The output of my system is a sequence of 0s and 1s

I also have a ground truth sequence of 0s and 1s

Here is how I have defined my metrics.

Precision = TP/TP + FP

Recall = TP/TP+FN

Thanks for the numbers. Sorry if this has been discussed before, but will there be an easy way to tune for better precision?

There are a few thing I want to try out that will improve the results :

Thanks. I didn’t only mean general improvement, but is there an easy way to tune for precision, accepting a lower recall? We do that with our ngram approach by changing a threshold and it helps to avoid false alarms.

I’m not exactly sure on how to do that. Are you saying in your ngram approach you kept a threshold that gave fewer False Positives? I will check it out

As Daniel said, it would be preferable to have more precision (fewer false positives), even at the expense of less recall (more false negatives). Let’s see if that is possible.

Your test set should be larger to find more interesting information.

I have tested some thousand correct sentences from the Europarl corpus, containing some words in the pair list (either, bounds, man…). Around 13% of the sentences give a false positive. We should try to minimize this number of false alarms.

Strangely, some false positives are related to words not present in the list. Is that possible? Could we simply ignore these positives? I’m not sure if we have talked about this before.

For example, this false positive is for the word “as”, but “as” is not in the list, or is it?

['I', 'would', 'stress', 'here', 'quite', 'clearly', 'and', 'unequivocally', 'that', ',', 'as', 'far', 'as', 'the', 'objectives', 'are', 'concerned', 'and', 'the', 'need', 'to', 'provide', 'the', 'necessary', 'funds', 'for', 'them', ',', 'there', 'is', 'no', 'dissent', 'whatsoever', '.'] 000000000010000000000

This is for “would”, but “would” is not in the list:

['I', 'would', 'point', 'out', ',', 'in', 'any', 'case', ',', 'that', 'one', 'of', 'the', 'organisations', 'with', 'which', 'Osama', 'bin', 'Laden', 'is', 'linked', 'is', 'called', 'the', "'", 'International', 'Front', 'for', 'Fighting', 'Jews', 'and', 'Crusades', "'", '.'] 010000000000000000000

I attach a file with more examples like these: false-positives-examples.txt.zip (45.0 KB)

Could you publish a plain text version of the list of confusion pairs you are using so we can see it?

The selected confusion pairs : https://github.com/drexjojo/confusion_pair_correction/blob/master/text_files/selected_cps.txt

Not selected : https://github.com/drexjojo/confusion_pair_correction/blob/master/text_files/not_selected_cps.txt

“as” was in the confusion pairs list but I had removed it before training the model.

“would” is not there in the confusion pairs.

These cases will be ignored. When a ‘1’ is encountered, the system will do a lookup in the confusion_dict to find its corresponding confusion word. But in the case of “as” it finds that “as” is not in the confusion dict and hence does not change it. But this is a sign that the model should be improved, it’s not supposed to tag non-confusion words as ‘1’.

@dnaber the french confusion pairs found here have only ~50 confusion pairs compared to ~700 for english. Shall I train the model with just these pairs or is there any way I can get more to train on?

You could try org.languagetool.dev.wordsimilarity.SimilarWordFinder to automatically find similar words. Or find lists of commonly confused words on the web. But starting with those ~50 pairs would also be fine.

[Update July 11th]

I have streamlined the code for the training process. Instruction on how to run is added to the Git repo.

Regarding the French confusion pair correction: So far I have trained 6 different version of the model but the results produced are not good. This is because the number of confusion pairs in French is less as compared to that in English. So the number of incorrect sentences formed <<< number of correct sentences.

I have fixed the problem now by using (Tatoeba + europarl + French News Crawl Articles 2013 + French News Crawl Articles 2008) and removing sentences which doesn’t have a confusion word (with a probability of 1/10). The model is being trained now.

[Update July 25th]

-> There was a tokenization problem in the French model. Most predictions were correct but offset by a few places. Fixed it and started training it again yesterday.

-> The training and the evaluation have been streamlined so it would be easy to train and evaluate new languages. The instructions have been added to the git repo.

-> @jaumeortola and I will begin working on the integration of the models into the LT codebase.

-> Been reading up on ways to increase precision with less recall. Will try out some methods and report back by Saturday.

[Update July 30th]

I have finished the evaluations for French. The results do not look good. I have analyzed the results and found that, in cases where there are only one incorrect use of a word, the model performs well enough but predictions become wayward when there are more errors.

Total no. of (correct + incorrect) sentences : 2,276,775 (French News crawl 2007 dataset)

true_positives : 1555726

true_negatives : 29600776

false_positives : 692309

false_negatives : 699855

Precision : 0.692038157768896

Recall : 0.6897229582976625

Avg Time taken per sentences : 0.03798736967187384

There seems to the problem of index offsets.

input : Mais pour se dernier , cette procédure qui facilite la vie numérique à du plomb dent l ’ aile .

prediction : [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 3, 0]

ground truth : [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0]

input : Mais pour ce dernier , sept procédure qui facilite la vie numérique à du plomb dent l ’ aile .

prediction : [0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 3, 0]

ground truth : [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0]

input : Mais pour se dernier , sept procédure qui facilite la vie numérique à du plomb dent l ’ aile .

prediction : [1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 3, 0]

ground truth : [0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0,

There is an offset of 2 positions in the above cases. The tokenization problem have been fixed. I have triple checked the code and found that there are no mistakes. I am unable to figure out why this offset problem is happening.

I am beginning to think the entire approach is fundamentally wrong. Shall I try different methods? I have a few ideas I want to try out. @dnaber what do you think?

I’m not sure I understand, could you provide an example? Also, what pairs does the evaluation refer to? Is it the average precision and recall for all French confusion pairs? If so, how do precision and recall look when considering each pair on its own?

Please post more about that. You and @jaumeortola should decide together on how to spend the remaining time of GSoC.

It works reasonably well for cases where there is only one error present.

Input : Le chef libéral Michael Ignatieff à également tenu à rendre hommage à Serge Marcil .

prediction : [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 3, 3, 3]

ground truth : [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

But there is an index offset occurring when there are more than one error words present.

input : Mais pour se dernier , cette procédure qui facilite la vie numérique à du plomb dent l ’ aile .

prediction : [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 3, 0]

ground truth : [0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0]

Yes the evaluation was done for all confusion pairs in french. It is the average precision and recall of all the pairs.

I have not done the pair-wise eval as of yet.

Okay. I’ll discuss it with @jaumeortola